Saturday, June 29, 2013

Week 6: Exploring and Manipulating Spatial Data

This week's assignment focused on exploring and manipulating spatial data. We created a new geodatabase via our script and then populated it with feature classes created from existing shapefiles. Then we were introduced to cursors. We created a SearchCursor along with a snippet of SQL to find the cities that are county seats in the cities feature class. Finally, we populated a dictionary with city:population key:value pairs derived from our county seat query.

All in all, a pretty straightforward lab. The only thing that really hung me up was forgetting to delete the cursor, which left a lock on the gdb and had me wondering why my env.overwriteOutput=True wasn't working.
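
For anyone without ArcGIS handy, here is a minimal stand-in for the cursor-plus-dictionary pattern from this lab. It is not arcpy: it swaps in Python's built-in sqlite3 module for a SearchCursor, and the table and field names (cities, NAME, POP, COUNTY_SEAT) are hypothetical. The finally clause plays the role of deleting the cursor, which is the step I forgot.

```python
import sqlite3


def county_seat_populations(conn):
    """Return {city_name: population} for rows flagged as county seats."""
    # Hypothetical field names; the lab's feature class may use different ones.
    where_clause = "COUNTY_SEAT = 'Y'"
    cursor = conn.cursor()
    try:
        cursor.execute("SELECT NAME, POP FROM cities WHERE " + where_clause)
        # Build the city:population dictionary one row at a time.
        seats = {}
        for name, pop in cursor:
            seats[name] = pop
        return seats
    finally:
        # Release the cursor explicitly, analogous to `del cursor` in arcpy.
        cursor.close()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE cities (NAME TEXT, POP INTEGER, COUNTY_SEAT TEXT)")
    conn.executemany(
        "INSERT INTO cities VALUES (?, ?, ?)",
        [("Alpha", 12000, "Y"), ("Beta", 3400, "N"), ("Gamma", 56000, "Y")],
    )
    print(county_seat_populations(conn))  # {'Alpha': 12000, 'Gamma': 56000}
    conn.close()
```

In arcpy itself, the arcpy.da cursors can be used in a `with` block, which should release the lock automatically when the block ends; that would have saved me some head-scratching.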

Saturday, June 22, 2013

Surface Interpolations

The first set of maps applies different density and surface interpolation methods to the same set of shovel test data (total artifacts) created by Dr. Palumbo in Panama. I preferred the Kernel Density result (with a radius of 50), as it seemed the most straightforward. The interpolation surfaces (Kriging, IDW, Spline, and Natural Neighbor) use various mathematical models to create predictive surfaces.
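
To get a feel for what Kernel Density is doing, here is a rough sketch in plain Python. It assumes a quartic kernel, which is how I read the ArcGIS documentation for the tool; it is not Esri's code, and the shovel test coordinates and counts in the demo are made up. Each point spreads its artifact count over a circle of the given radius, falling smoothly to zero at the edge.

```python
import math


def quartic_kernel_density(x, y, points, radius):
    """Estimate density at (x, y) from weighted points using a quartic kernel.

    points: iterable of (px, py, weight), e.g. artifact counts per shovel test.
    Points at or beyond `radius` contribute nothing.
    """
    total = 0.0
    for px, py, weight in points:
        d = math.hypot(x - px, y - py)
        if d < radius:
            # Quartic (biweight) kernel: 1 at the point, 0 at the radius.
            total += (3.0 / math.pi) * weight * (1.0 - (d / radius) ** 2) ** 2
    # Dividing by radius squared makes each point's contribution integrate to its weight.
    return total / radius ** 2


if __name__ == "__main__":
    # Hypothetical shovel tests: (x, y, total artifacts).
    tests = [(0, 0, 12), (20, 10, 4), (60, 40, 9)]
    for x in range(0, 101, 25):
        print(x, round(quartic_kernel_density(x, 0, tests, radius=50), 5))
```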

The second poster focuses on data converted from AutoCAD for the Machalilla region. This was a useful exercise in understanding how to convert common data types to ArcGIS and then manipulate them using tables and joins. One complicating factor was that the AutoCAD data has no coordinate system. The data appears to be in kilometers (probably data originally in UTM zone 17S that was reset to a local datum), which at least allowed me to create a scale bar in kilometers. We also used the largest polygon from the conversion as the study area boundary and used it to clip the rasters. For reasons I can't explain, one clip (kernel density) exhibits "the donut effect" but the other clips do not.

Next, the grads continued with the Machalilla data to create a series of Inverse Distance Weighting (IDW) interpolations set to differing powers in an attempt to replicate the Peterson & Drennan (2005) article. The general idea is that social interaction occurs within communities (in this case, in the Regional Development Period) and that clusters form across the landscape, with a (subjective) distance, perhaps under 2 km, acting as the limit on daily communication between these community clusters. By applying IDW with various powers, we can find the smoothing point where community clusters reach this interaction limit and gain some understanding of how communities manifested on the landscape. A power of 1 seemed to provide a good view that didn't have too much "steepness" or intensity while still smoothing out to clusters of about 2 km. This seems to show intense community interaction in the southeast corner of the region that sweeps in a curve west and then north up the coast. There also seems to be an isolated region in the middle of this area of interconnectedness.
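
The effect of the power setting is easier to see in a toy example. This is a minimal IDW sketch, not the ArcGIS implementation: ArcGIS limits each estimate to a search neighborhood, while this version uses every sample, and the sample values are hypothetical. A higher power lets the nearest sample dominate (a "steeper" surface); a lower power spreads influence farther.

```python
import math


def idw(x, y, samples, power=1.0):
    """Inverse distance weighted estimate at (x, y).

    samples: iterable of (sx, sy, value). Each sample gets weight 1 / d**power.
    """
    num = 0.0
    den = 0.0
    for sx, sy, value in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return value  # exactly on a sample point: use its value directly
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


if __name__ == "__main__":
    # Two hypothetical samples 3 units apart; estimate 1 unit from the first.
    samples = [(0, 0, 10.0), (3, 0, 0.0)]
    print(round(idw(1, 0, samples, power=1), 3))  # 6.667
    print(idw(1, 0, samples, power=2))  # 8.0
```

Raising the power from 1 to 2 pulls the estimate from about 6.7 toward the nearby value of 10, which is the "steepness" I was trying to avoid with a power of 1.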

Finally, the grads worked some stats problems using the t-test. I liked the Drennan (1996) reading so much, I bought his book.
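
For reference, the two-sample t statistic can be computed with nothing but Python's statistics module. This sketch assumes equal variances (the pooled, Student's version) and returns only t and the degrees of freedom; you would still look up the significance in a t table, or use something like scipy.stats.ttest_ind. The sample numbers are made up.

```python
import math
from statistics import mean, variance


def pooled_t_test(a, b):
    """Return (t, df) for a two-sample t-test assuming equal variances."""
    n1, n2 = len(a), len(b)
    # Pooled variance: the two sample variances weighted by their degrees of freedom.
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    # Standard error of the difference between the two means.
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    t = (mean(a) - mean(b)) / se
    return t, n1 + n2 - 2


if __name__ == "__main__":
    # Hypothetical counts from two groups of sites.
    print(pooled_t_test([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))  # (-2.0, 8)
```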

Digitizing Archaeological Maps and Data

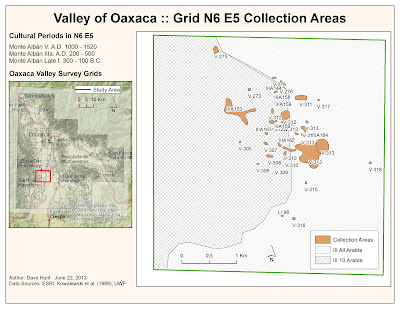

This week's assignment was pretty interesting and should be useful for anyone working in a region that saw a lot of fieldwork in previous decades, particularly before records were computerized. The general idea was to take older documents from a Valley of Oaxaca (Mexico) survey, digitize and georeference those maps onto an ArcGIS basemap, digitize various aspects of the maps (sites and land use), and then use data from the original surveys to do analysis. The first map (above) shows my three assigned grids (N6 E4, N6 E5, and N6 E6) georeferenced onto a digital topographic map, along with the larger Valley of Oaxaca survey area.

The biggest challenge was getting the larger survey map georeferenced onto the base map. My original base map selection contained far too many political features, and the topographic features were hard to see and match up with the survey area scan. I did find a base map that seemed to have fewer objects cluttering the topography, although it would be nice to find a base map that was purely topographic, without towns, roads, etc. Once the main survey scan was in place, it was a relatively simple matter to geolocate the assigned grids. The corners matched up quickly and easily, and there seemed to be good correspondence, particularly with the soil type maps.

The second part of the lab involved creating an individual map for each of our assigned grids (below). For these maps, we digitized the site collection areas as polygons and captured the cultural period data from field notes.

The biggest challenge was initially understanding the original drawings. However, once I entered “field note-taker” mode, it was pretty easy to understand how they were labeling each collection area. Creating the collection area polygons progressed fairly quickly, though there were some annoyances with polygons closing themselves too early and with the stop editing/edit attribute table/delete bad polygon/start editing again process. I used label classes to deal with the multiple cultural periods per collection area. Some areas are quite crowded and therefore cluttered. Overall, I was pretty happy with the end result.

Finally, the grad students continued on to join some additional site data to the existing digitized collections for a specific cultural period. I didn’t have any IIIB artifacts in my three assigned grids, so I used IIIA (of which I had a lot) to demonstrate the join to the additional data table. The join was quite straightforward. After separating the IIIA collection polygons into their own layer, I simply adjusted the symbology to use the joined lopop field and color-graded the sites by their estimated low population.

Thursday, June 20, 2013

Module 5 - GIS Programming

This week we started actually using Python to drive functions within ArcMap. This exercise got us started on setting up a good script template with the relevant imports, environment setup, and printed messages from completed functions. We ran three different functions: AddXY_management, Buffer_analysis, and Buffer_analysis with dissolve turned on. All in all, things went well this week, and the results are shown above.

Wednesday, June 12, 2013

Georeferencing - Macao Then and Now

This week's assignment was pretty interesting and involved georeferencing historical maps to contemporary maps. The light yellow map above is a map of Macao (China) produced by Captain Cook (and William Bligh) in 1775. This map was obviously created at a time when both latitude and especially longitude were difficult to determine with high accuracy. To "fit" this map to a modern map, we used control points to match landmarks that appear to be unchanged over the time between the two maps. A lot has gone on in Macao since 1775; in particular, there appears to have been a lot of land reclamation that filled in areas between the islands of Macao. To find control points, it was especially useful to look at a topographic map for the landmarks that would have stood out to sailors mapping from a ship. In a few places (particularly to the north) we see a great deal of distortion. This is the result of either inaccurate distances in the original map or a mismatch on my part. However, most of the smaller islands seem to be in about the right place.
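
What the georeferencing tool does with control points can be approximated in a few dozen lines. This is a sketch of a least-squares first order (affine) fit, one of the transformation options ArcGIS offers, plus the RMS error of the residuals; it is not Esri's implementation, and the function names are my own. A large RMS error, or large residuals at particular points, is one way the kind of distortion I saw to the north would show up.

```python
import math


def _solve3(m, v):
    """Solve the 3x3 linear system m @ p = v with Cramer's rule."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    d = det(m)
    if abs(d) < 1e-12:
        raise ValueError("need at least 3 control points that are not collinear")
    result = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        result.append(det(mc) / d)
    return result


def fit_affine(control_points):
    """Least-squares affine fit from ((src_x, src_y), (dst_x, dst_y)) pairs.

    Returns (coef_x, coef_y) so that dst_x = a*x + b*y + c and dst_y = d*x + e*y + f.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # normal matrix A^T A
    atx = [0.0] * 3                      # A^T times destination x
    aty = [0.0] * 3                      # A^T times destination y
    for (sx, sy), (tx, ty) in control_points:
        row = (sx, sy, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * tx
            aty[i] += row[i] * ty
    return _solve3(ata, atx), _solve3(ata, aty)


def rms_error(control_points, coef_x, coef_y):
    """Root mean square distance between fitted and actual control point positions."""
    total = 0.0
    for (sx, sy), (tx, ty) in control_points:
        px = coef_x[0] * sx + coef_x[1] * sy + coef_x[2]
        py = coef_y[0] * sx + coef_y[1] * sy + coef_y[2]
        total += (px - tx) ** 2 + (py - ty) ** 2
    return math.sqrt(total / len(control_points))
```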

This was a pretty fun assignment and one that will be super useful for anyone doing historic archaeology.

Monday, June 10, 2013

Participation Discussion

Photogrammetry is the technique of creating a 3D model (or topography) from a series of photographs. Basically, you take a series of photos of your subject from varying angles. The object being modeled can range from something small (I have done it with the skull of an extinct animal) to a landscape (the biggest I have done is my backyard, but it can be done at a very large scale). From this series of photos, computer software determines the shape of the object by comparing the same points in different shots and the various angles of focus. The result is a "mesh," or 3D model, of your object.

Below is a very low resolution example of a photogrammetric model of a garden in my backyard created by taking about 50 images around the bird bath in the photo. It is a wire-frame model that can be fully rotated.

This article discusses the intersection of photogrammetric modeling and GIS. Just like geolocating a raster or historical image to a map, we can now geolocate a 3D mesh to a map. The uses of this will be many, but I am particularly interested in how it can be used in archaeology. Imagine being able to go out to a complex archaeological site (like a pueblo house structure), spend the day taking lots of photos from every conceivable angle, and then build a 3D model from those photos and plop it directly into a map. It will be an amazing way to capture data and even to compare sites over time. And it won't be just for archaeology but also for environmental work, urban planning, and pretty much anything you can imagine as a 3D model that you need to analyze spatially.

Photogrammetric Modeling + GIS by Rachel Opitz and Jessica Nowlin, 2012, Esri News

Historical Archaeology

This week in Archaeological Applications, we focused on online sources of historical data and the lab specifically focused on the retrieval and use of historical maps and documents. There are numerous sources for materials that archaeologists may need. Our lab took us to Ancestry.com, Arcgis.com, and Google Maps Street View. From a technical perspective, we also explored including hyperlinks within a map.

Graphic design and layout are probably among my weaknesses when it comes to maps, so more practice is good, I suppose. I always feel like I'm cramming more items into a map than could possibly fit, however.

Wednesday, June 5, 2013

Python Basics II

We continued with our intro to the basics of the Python language. This week we played a little with lists and also started on the various iteration methods. We used a while loop to create a list of random numbers and also to control a block of code that looked for a specific number and removed all instances of it from the list. I also learned that you can't safely delete items from a list while you're iterating over the list itself (as in for eachitem in myList). That makes sense, but it also feels like the natural approach (judging by the large number of people I found asking about it on Stack Overflow).
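
Here is a small reconstruction of the week's list exercises, not my actual lab script (the function names are made up for illustration). The while loops build the random list and strip out a target number; the last function shows the safer habit of building a new list instead of deleting from the one you are looping over.

```python
import random


def random_list(count, low, high, seed=None):
    """Build a list of `count` random integers in [low, high] using a while loop."""
    rng = random.Random(seed)
    numbers = []
    while len(numbers) < count:
        numbers.append(rng.randint(low, high))
    return numbers


def remove_all(numbers, target):
    """Remove every occurrence of target in place, using a while loop."""
    while target in numbers:
        numbers.remove(target)  # list.remove deletes only the first match
    return numbers


def without(numbers, target):
    """Safer alternative: return a new list rather than deleting while iterating."""
    return [n for n in numbers if n != target]


if __name__ == "__main__":
    # The pitfall: removing during a for loop shifts items, so one gets skipped.
    nums = [3, 3, 5]
    for n in nums:
        if n == 3:
            nums.remove(n)
    print(nums)  # [3, 5]
    print(remove_all([3, 3, 5], 3))  # [5]
```

The for loop misses the second 3 because removing the first one shifts everything left while the loop's position keeps moving forward.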
